Proprietary Data Is Leaking from Your Production Systems

When developers call AI APIs from backend services or CI/CD pipelines, they can inadvertently send sensitive information, such as proprietary source code and customer personally identifiable information (PII), to third-party AI models without your knowledge.

Key Pain Points

Leaked Source Code

Backend services can send proprietary code snippets to AI models, putting your intellectual property at risk.

Exposed Customer Data

Customer PII from your production databases can be sent as prompt context to AI services, violating customer privacy.

Zero Visibility

Traffic from your servers to AI vendors is TLS-encrypted, so it is a black box you cannot audit.

Visibility for Safe AI Adoption in Your Infrastructure

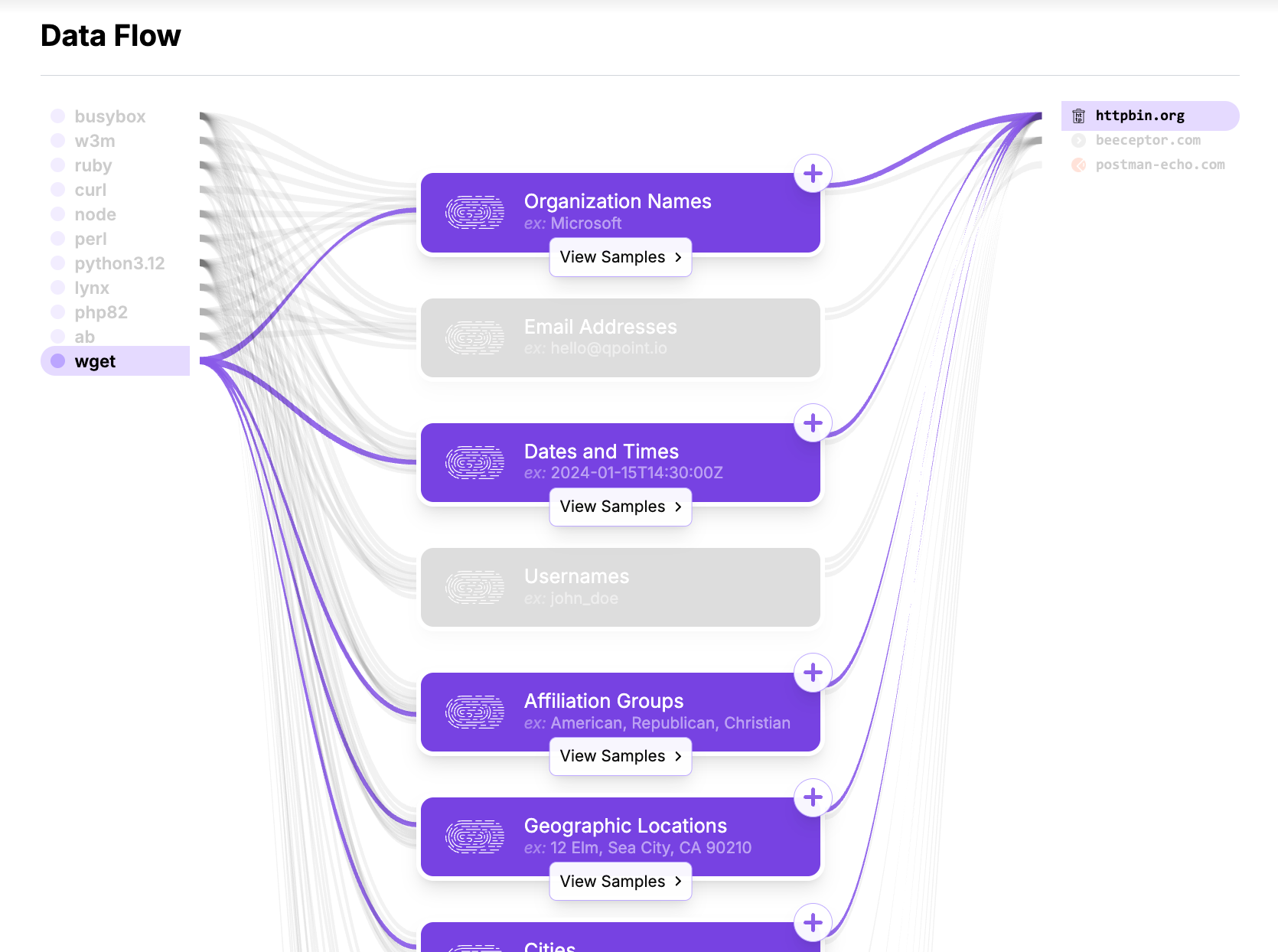

See Every AI Connection. Classify Every Payload.

Qpoint gives you the ground truth you need to adopt AI with confidence. We show you exactly what data your backend infrastructure sends to any AI service, before it is encrypted.

Key Capabilities

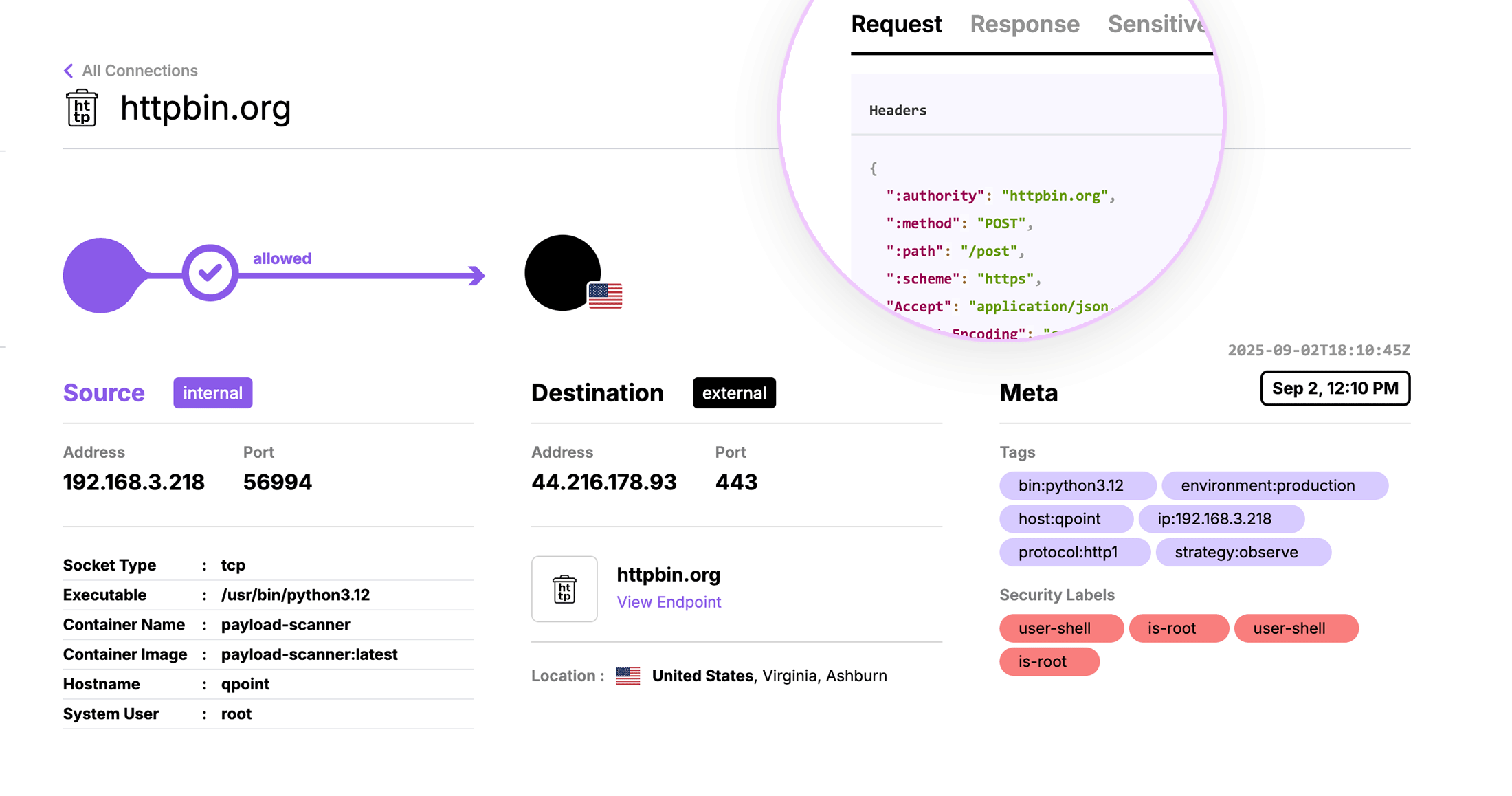

Identify Every AI Connection

Get a complete, real-time inventory of every backend process connecting to external AI APIs.

Classify Transmitted Data

See the exact contents of each payload, with automatic classification of source code, PII, and credentials.

Build Safe Usage Policies

Use definitive evidence of actual usage to create and enforce safe AI usage guidelines for your teams.

Link to the Source

Trace every AI-bound request back to the specific process, container, and host in your production environment.